It’s often said that there are two halves to the SEO battle: on-page or on-site (your own content, like page titles, headings and content) and off-site (links and mentions of your site on other domains), but this isn’t quite right. There is a third stream, and that is the technical—what happens on your site behind the scenes. Trying to start a standard SEO campaign without clearing technical considerations first is like trying to run with your shoelaces tied together.

We can find potential SEO problems on your site, and we can do it fast, with a combination of made-to-order and industry-leading tools and outstanding human expertise. Fix those and every single SEO action you take afterwards will bring in greater results.

Technical considerations

Technical SEO is indispensable, particularly for larger, older or more complex sites. Ensuring maximum crawl efficiency with clean sitemaps, properly declaring language and location targets within HTML, and carefully controlling duplicate and near-duplicate content can help make sure there is nothing holding back your SEO efforts in other areas.

Sitemaps

Sitemaps help search engines navigate a whole site easily, and as SEO is all about showing off your content as effectively as possible, all sites should have one.

A sitemap is a list of all the URLs search engines should pay attention to, with a little additional information like priority (this is a suggestion or guideline for search engines, not a rule that they must obey) and update frequency.
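As a rough illustration, a basic sitemap with these fields could be generated as follows. This is a minimal sketch: the `build_sitemap` helper and the example.com URLs are our own placeholders, and a production file would also start with an XML declaration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Return sitemap XML as a string.

    `entries` is a list of dicts with a required "loc" key and optional
    "changefreq" and "priority" keys (hypothetical input format).
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        if "changefreq" in entry:
            ET.SubElement(url, "changefreq").text = entry["changefreq"]
        if "priority" in entry:
            # Priority is a hint between 0.0 and 1.0, not a rule.
            ET.SubElement(url, "priority").text = f"{entry['priority']:.1f}"
    return ET.tostring(urlset, encoding="unicode")

sitemap_xml = build_sitemap([
    {"loc": "https://www.example.com/", "changefreq": "daily", "priority": 1.0},
    {"loc": "https://www.example.com/wallpaper/blue", "changefreq": "weekly", "priority": 0.8},
])
print(sitemap_xml)
```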

The basic sitemap functionality can be extended in several different ways. For example, those who really want their images to appear in services like Google Image Search may benefit from a special image sitemap. Those with a lot of video content may want a video sitemap too.

Sitemaps can also be very valuable where content is international in nature. For example, you can use a sitemap to signpost the relationship between a group of pages providing the same content in different languages and help search engines make sure that the right content is served to users in the right location.
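One common way to signpost these relationships is with `xhtml:link` alternate entries carrying an `hreflang` value inside the sitemap. The sketch below assumes a hypothetical `build_hreflang_sitemap` helper and made-up URLs; each language version lists every version, including itself.

```python
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML = "http://www.w3.org/1999/xhtml"
ET.register_namespace("", SM)          # sitemap elements use the default namespace
ET.register_namespace("xhtml", XHTML)  # alternates use the xhtml: prefix

def build_hreflang_sitemap(alternates):
    """`alternates` maps a language code to the URL of that language version."""
    urlset = ET.Element(f"{{{SM}}}urlset")
    for url in alternates.values():
        entry = ET.SubElement(urlset, f"{{{SM}}}url")
        ET.SubElement(entry, f"{{{SM}}}loc").text = url
        # Every version points at all versions of the same content.
        for lang, href in alternates.items():
            ET.SubElement(
                entry,
                f"{{{XHTML}}}link",
                {"rel": "alternate", "hreflang": lang, "href": href},
            )
    return ET.tostring(urlset, encoding="unicode")

hreflang_xml = build_hreflang_sitemap({
    "en": "https://www.example.com/en/wallpaper",
    "de": "https://www.example.com/de/tapete",
})
print(hreflang_xml)
```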

Once created, the sitemap (or sitemap index if there is more than one sitemap) should be uploaded through Google and Bing Webmaster Tools and verified there.

Robots.txt

The robots.txt file is a simple complement to the sitemap, and lists URLs or folders that search engines need not crawl. This cuts down on wasted crawl time and ensures that search engine attention goes where it will do the most good.
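To see how a set of rules will be interpreted before publishing it, the file can be checked with Python's standard `urllib.robotparser` module. The rules and URLs below are invented for illustration only.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: keep crawlers out of checkout and internal search.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /search
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def is_crawlable(url, user_agent="*"):
    """Return True if the rules above allow `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)

print(is_crawlable("https://www.example.com/wallpaper/blue"))   # allowed
print(is_crawlable("https://www.example.com/checkout/basket"))  # blocked
```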

Duplicate content and faceted search

Duplicate content is one of the most common problems we see, particularly on e-commerce sites. It can arise in several ways, but perhaps the most prevalent is in product categorization. Let’s say we have an online wallpaper retailer. A user can navigate to /wallpaper/blue and then to /wallpaper/blue/stripe or /wallpaper/stripe/blue. The content on both pages is the same, but there are two URLs competing against one another.

To deal with this problem, developers can either enforce an order in URLs, or place an additional line of HTML stating that one of those pages is a duplicate of the other. This is called a rel="canonical" relationship.
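Both options can be sketched in a few lines. The facet vocabularies, the colour-then-pattern ordering and the example.com origin are assumptions made for this example, not a description of any particular platform.

```python
from html import escape

# Hypothetical facet vocabularies; canonical order is colour, then pattern.
FACET_ORDER = [
    {"blue", "red", "green"},       # colours
    {"stripe", "floral", "plain"},  # patterns
]

def canonical_path(path):
    """Reorder facet segments so each combination has exactly one URL."""
    parts = [p for p in path.split("/") if p]
    if not parts:
        return "/"
    category, facets = parts[0], parts[1:]
    ordered = []
    for vocabulary in FACET_ORDER:
        ordered.extend(f for f in facets if f in vocabulary)
    # Unrecognised segments keep their original relative order at the end.
    known = set().union(*FACET_ORDER)
    ordered.extend(f for f in facets if f not in known)
    return "/" + "/".join([category] + ordered)

def canonical_tag(path, origin="https://www.example.com"):
    """Build the HTML line that declares the preferred URL for a page."""
    href = origin + canonical_path(path)
    return f'<link rel="canonical" href="{escape(href, quote=True)}">'

print(canonical_path("/wallpaper/stripe/blue"))
print(canonical_tag("/wallpaper/stripe/blue"))
```

A site could redirect any request whose path differs from `canonical_path(path)`, or simply emit the tag in the page head and leave both URLs reachable.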

The rel="canonical" approach can also be used where there are a large number of choices available, resulting in long URLs like /wallpaper/blue/stripe/sort-by-price/room=bathroom.

Where a user can select multiple options in multiple categories, the number of possible URLs generated rises exponentially. A site with a few thousand products can end up with hundreds of thousands of URLs generated.
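The arithmetic is easy to check. The facet groups and option counts below are purely illustrative, and the result is the number of possible filter combinations for a single category page.

```python
from math import prod

# Hypothetical facet groups for one category page: group -> number of options.
facet_options = {"colour": 12, "pattern": 8, "room": 6, "sort": 4}

# If the user picks at most one option per group (or leaves it unset):
single_choice_urls = prod(n + 1 for n in facet_options.values())

# If the user may tick any subset of options within each group:
multi_choice_urls = prod(2 ** n for n in facet_options.values())

print(single_choice_urls)  # 4095
print(multi_choice_urls)   # 1073741824
```

Even the restrictive single-choice case yields thousands of URLs from just thirty options, which is why the number of crawlable pages can dwarf the number of products.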

We could just cut these out of indexation with robots.txt and our sitemap, but it’s useful to have a Blue Stripe Wallpaper page included in the search index, as this could easily attract a small but significant amount of traffic. Blue Stripe Bathroom Wallpaper, by contrast, is likely to have very little value as a landing page, and the sort-by-price option adds no search value. We don’t want this page indexed, so the best practice is to include a rel="canonical" relationship back to a parent page. This marks the page as a variation on something more significant and means that if anyone should link to the child page, the parent takes the value.
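A simple way to pick the parent is to keep only the facets judged worth indexing and drop the rest. The `INDEXABLE_FACETS` set here is a hypothetical editorial decision, not a fixed rule.

```python
# Hypothetical rule: only colour and pattern facets earn their own indexed page.
INDEXABLE_FACETS = {"blue", "red", "stripe", "floral"}

def canonical_parent(path):
    """Return the parent URL path that a faceted page should point to."""
    parts = [p for p in path.split("/") if p]
    if not parts:
        return "/"
    category, facets = parts[0], parts[1:]
    kept = [f for f in facets if f in INDEXABLE_FACETS]
    return "/" + "/".join([category] + kept)

print(canonical_parent("/wallpaper/blue/stripe/sort-by-price/room=bathroom"))
# /wallpaper/blue/stripe
```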

A full white paper on best practices for dealing with duplicate and near duplicate content is available upon request.

Bing and Google Webmaster Tools

Both Google and Bing provide a Webmaster Tools service (now called Search Console for Google), as a two-way conduit of information between your site and the search engine. Both allow you to upload and verify sitemaps, make declarations about geographical targets and provide other useful information to help search engines understand your site.

You can also receive information from Webmaster Tools services, for example when there has been a problem crawling your website. It’s well worth signing up to both services to make sure you stay informed.

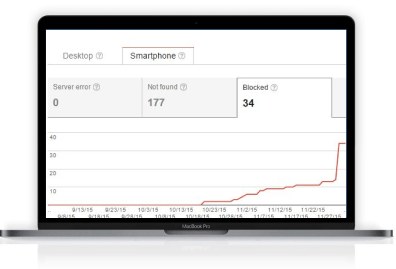

Server and client errors

Webmaster Tools records instances where the search crawler has encountered a server error (for example, when the site is down for some reason) or a client error like a 404 page not found. We like to check these at least once a week, and also set up alerts so we find out about serious site problems as soon as possible.

We can also make a manual crawl with SEO software. This kind of crawler takes a page as input and follows all internal links across the site, checking for errors and sometimes recording other information along the way. A manual crawl is recommended after major changes have been made on site, to ensure it is error-free. This is also part of our standard practice at the beginning of any SEO campaign.
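The core of such a crawler is small. The sketch below is not how any particular SEO tool works; it is a minimal breadth-first crawl in which the `fetch` function is injected, so a tiny in-memory "site" (our own invention) can stand in for real HTTP requests.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag on a page."""
    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

def crawl(start_url, fetch):
    """Follow internal links from `start_url`; return {url: status} for errors.

    `fetch(url)` must return a (status_code, html) tuple.
    """
    host = urlparse(start_url).netloc
    seen, queue, errors = {start_url}, deque([start_url]), {}
    while queue:
        url = queue.popleft()
        status, html = fetch(url)
        if status >= 400:  # client (4xx) and server (5xx) errors
            errors[url] = status
            continue
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            target = urljoin(url, href).split("#")[0]
            # Only follow links that stay on the same host.
            if urlparse(target).netloc == host and target not in seen:
                seen.add(target)
                queue.append(target)
    return errors

# Hypothetical pages standing in for a live site.
PAGES = {
    "https://www.example.com/": (200, '<a href="/wallpaper">Shop</a> <a href="/old-page">Old</a>'),
    "https://www.example.com/wallpaper": (200, '<a href="/">Home</a> <a href="https://other.example.org/">Partner</a>'),
}

def fake_fetch(url):
    return PAGES.get(url, (404, ""))

crawl_errors = crawl("https://www.example.com/", fake_fetch)
print(crawl_errors)  # {'https://www.example.com/old-page': 404}
```

Swapping `fake_fetch` for a function that makes real requests would turn this into a working, if very basic, site check.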

Content considerations

For SEO, content is all about demonstrating relevance to a particular search query. The first step is to decide what keywords you should be targeting—get this right and you’ll have a solid foundation to build on. We call this phase keyword research.

A great keyword target should be highly relevant and represent a good probability of eventual conversion. It should have enough traffic to justify the effort put into optimization (although long-tail keyword strategies can work well for sites with a large number of pages), and it should be achievable when the strength of competition is considered.

Once the keyword research is complete, it’s time to start applying the keywords on-page. First and foremost, we look to optimize the following:

- Page titles: The page title is what you see in blue in search engine results pages and the text that appears at the top of your browser tab when you visit the page.

- H1 headings: There should be just one H1 or primary heading per URL, placed high up on the page.

- Text content: Every page should have some body text, as this is still the best way to tell a search engine what a page is about, and therefore demonstrate relevance to the chosen keyword/s. If it’s well written, this text is almost always useful for encouraging conversion as well. The placement of the text is taken into account, not just the content.

- H2 headings: H2 or secondary headings are useful for SEO but also great for breaking up text and making it more readable.

- Meta description tags: These tags don’t actually appear on the page, but can show up on search engine results pages as shown. They don’t have a strong influence on rankings but can certainly increase click-through rates and therefore lift visitor numbers.

- URL structure: Easily interpreted URLs are great for SEO and make analytics easier too.
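Several of the items above can be checked automatically. The following is a minimal sketch of such a check using Python's standard HTML parser; the `audit` function, its messages and the sample page are our own illustration rather than a standard tool.

```python
from html.parser import HTMLParser

class OnPageAudit(HTMLParser):
    """Record the page title, heading counts and meta description."""
    def __init__(self):
        super().__init__()
        self.title = ""
        self.h1_count = 0
        self.h2_count = 0
        self.meta_description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "h2":
            self.h2_count += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def audit(html):
    """Return a list of basic on-page issues found in `html`."""
    parser = OnPageAudit()
    parser.feed(html)
    issues = []
    if not parser.title.strip():
        issues.append("missing page title")
    if parser.h1_count != 1:
        issues.append(f"expected exactly one H1, found {parser.h1_count}")
    if not parser.meta_description:
        issues.append("missing meta description")
    return issues

sample_page = (
    "<html><head><title>Blue Stripe Wallpaper</title></head>"
    "<body><h1>Blue Stripe Wallpaper</h1><h1>Sale</h1></body></html>"
)
print(audit(sample_page))
# ['expected exactly one H1, found 2', 'missing meta description']
```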

Depending on the project, we might also look at:

- Image alt description tags: These are short descriptive sentences outlining image contents, and are good for improving access for the visually impaired too.

- Image file names

- Menu structure and internal links

- Footer contents: Having a full street address in a site-wide footer is a strong local SEO signal.

- Structured data: Sometimes it’s useful to include extra tags in HTML, to help search engines understand things like addresses, product reviews and video metadata.
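For structured data, one widely used format is JSON-LD with the schema.org vocabulary. The sketch below builds an address block for a made-up business; the helper name and all business details are placeholders.

```python
import json

def local_business_jsonld(name, street, city, postcode, country, phone):
    """Return a <script> block describing a business with schema.org terms."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "postalCode": postcode,
            "addressCountry": country,
        },
    }
    # Escape "</" so the JSON cannot close the script element early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + body + "</script>"

snippet = local_business_jsonld(
    name="Example Wallpaper Co.",  # hypothetical business details
    street="1 High Street",
    city="Exampleton",
    postcode="AB1 2CD",
    country="GB",
    phone="+44 1234 567890",
)
print(snippet)
```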

Linkbuilding and outreach

Once a site has been rendered as efficient and technically clean as possible, and well-planned keywords deployed on site with good content, it’s time to start looking at promotion away from your own domain. When relevance has been proven, you must then demonstrate that the site is authoritative and trustworthy enough to supply the needs of the searcher.

Like human beings, search engines judge websites by the quality of their friends. Sites with links from relevant, high-authority, choosy domains will gain some authority by association. Those with few links will remain an unknown quantity, and those with only links from poor quality or spammy sources will naturally be tarred with the same brush.

To aid understanding of what makes a quality link, consider a new haute cuisine chef trying to make a name. An endorsement from a shoe maker means only a little, because shoe makers have very little culinary expertise. An endorsement from a food critic means more, because food critics know what makes a good chef. If that food critic happens to be well known, is willing to publicize the review widely and is known to be very picky about who they recommend, the endorsement is much more powerful.

Carrying the analogy a little further, an endorsement the food critic was paid to give means very little, and this kind of tactic could even be seen as cheating.

Along the same lines, a good link is:

- From a relevant site

- From a well-established site

- Prominently placed

- One of only a few, not a huge list

- Never the result of money changing hands.

White hat and black hat, ethical and unethical

Paying for links directly violates Google’s Webmaster Guidelines, and (like any kind of linkbuilding shortcut) can result in damage to rankings rather than improvements. Both algorithmic and manual ranking penalties are there to make sure we all play by the rules and rankings are as fair as possible, although there are unfortunately still plenty of people out there ready to risk their clients’ traffic with cheap, spammy, short-term, high-volume, low-quality links. These operators are known as ‘black hat’ or unethical linkbuilders.

The best way to ensure that a linkbuilding strategy is safe is to think of building relationships rather than getting links. Who do you really want to have a business relationship with online? How can you work with influencers in your marketplace to build something together? What has your site got to offer others in terms of resources they will want to link to?

Our linkbuilding strategy is different for every client, but here are some of the more standard methods we use to create both links and useful online relationships. All of them are ethical (or ‘white hat’). All meet the best of quality benchmarks: they may well bring in traffic through the link itself, as well as helping SEO more generally.

- Product reviews from very select bloggers. Only the most interesting and least commercialized blogs will do! Often this means finding a niche outside the blogging mainstream.

- Discounts for membership organizations. By offering a special member discount to a relevant organization (and this could be anything from a local chamber of commerce or trade body to a student club), it’s possible to get great exposure to people in your market, some goodwill and a link or two as well.

- Creation of truly useful content. Having handy apps and widgets or just beautifully crafted, useful blog posts on site helps attract links organically, and can also generate social media attention. Often some initial promotional work needs to be done to get the content in front of an audience though—if nobody sees it, it won’t be linked or shared.

- Competitions. Hosting a competition can bring in links whether you choose to go it alone or host with a partner organization. This could be as simple as donating a prize for a competition hosted by a blogger, or as complex as launching an industry scholarship and ensuring all online and offline PR is taken care of.

- Speaking opportunities. We encourage all clients to speak publicly at industry conferences or even host their own webinars (with a little help), as these occasions often bring in powerful links and generate excellent social media attention.

Social media and nofollow links

From a technical standpoint, links fall into one of two categories: nofollow and dofollow. Unless otherwise specified, links are dofollow, which means that search engines will use them and they are seen as passing SEO value. Nofollow links have an added rel="nofollow" attribute in their HTML which prevents this.
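The difference is visible in the HTML itself: a nofollow link carries `nofollow` in its `rel` attribute. As a small illustration, the sketch below sorts the links on a page into the two groups; the class name and the sample markup are our own.

```python
from html.parser import HTMLParser

class LinkClassifier(HTMLParser):
    """Split a page's links into followed and nofollowed lists."""
    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        # rel can hold several space-separated tokens, e.g. "nofollow noopener".
        rel_tokens = (attrs.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollowed.append(href)
        else:
            self.followed.append(href)

sample_html = (
    '<a href="https://www.example.com/guide">Guide</a>'
    '<a href="https://www.example.com/ad" rel="nofollow noopener">Ad</a>'
)
classifier = LinkClassifier()
classifier.feed(sample_html)
print(classifier.followed)    # ['https://www.example.com/guide']
print(classifier.nofollowed)  # ['https://www.example.com/ad']
```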

Almost all links from sources like Facebook posts, Tweets, and Pinterest pins are nofollow. So are the links from paid advertising networks like AdWords and Bing Ads, as are links from most high quality forums (including links in both posts and profiles) and blog comments.

Part of the reason for this is that social platforms, blogs and forums all want users to post only when they have something legitimate to say or share, and to keep the actions of spammers to a minimum. They don’t want people posting just to get links.

Therefore, posting your links to forums or in blog comments is not a helpful strategy unless you expect people to actually use those links. If you are providing a legitimate answer to a question, there is nothing wrong with doing this, but it won’t help your rankings at all.

Posting on social platforms is a little different, in that social activity can have an impact on SEO despite the nofollow nature of the links. It’s a common mistake to assume that if Google sees you have 500,000 Facebook followers they will know you have a quality website. In reality, the most effective social profiles are the ones that see plenty of interaction, not those that just have a large, silent (and possibly bought) audience.

Google My Business

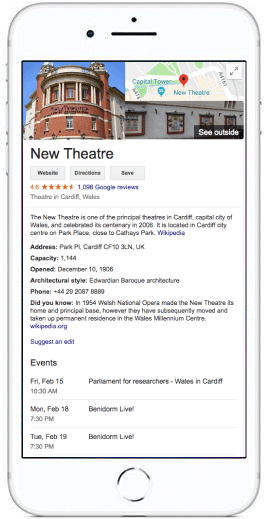

We recommend that all businesses start by claiming their Google My Business page. My Business takes in the old Google Plus Local (which in turn was a replacement for Google Places, which was a replacement for Google Business Directory) and Google Plus business pages. It’s a place you can provide information like address, opening hours, contact phone number, business type and photos, and also acts as a hub for reviews.

Claiming and populating a Google My Business listing verifies your business address, which is particularly useful for local SEO. It also means you can get additional ‘real estate’ in brand-related Google searches, as in the example shown, which appears to the right of standard search engine results for a local cinema.

Facebook

Facebook is generally a good option for B2C clients, although plain unpaid posting is much less effective than it used to be. Facebook now shows relatively little content from brands, even when the user has chosen to follow them.

However, it can still be very useful and a good source of likes, comments and shares as well as traffic.

Twitter

Indispensable for B2B clients and sometimes useful for B2C, Twitter is a good place to demonstrate excellent customer service and showcase your knowledge and thought leadership.

Pinterest

Businesses with a visual focus (for example restaurants, home decor retailers and fashion sites) can all do very well out of Pinterest. Unlike Facebook, the service is designed to pass users out to external sites rather than keep them on-platform. For some of our clients, Pinterest now outsells Facebook.

LinkedIn

Another must for B2B clients, LinkedIn is now much more than a place to put your CV. It’s now a place to showcase company products and services, gather reviews and endorsements from known, verified sources, publish technical content and connect with others in your industry.

We usually recommend joining and participating in a few relevant discussion groups as well as ensuring your company page is attractive to look at and well recommended.

YouTube

YouTube is a great place to gain an audience with how-to videos, and working with popular YouTubers can also bring in excellent and far-reaching results.